Google has just released Gemini Robotics-ER 1.5

It is a vision-language model (VLM) that brings Gemini’s agentic capabilities to robotics. It’s designed for advanced reasoning in the physical world, allowing robots to interpret complex visual data, perform spatial reasoning, and plan actions from natural language commands.

Enhanced autonomy – Robots can reason, adapt, and respond to changes in open-ended environments.

Natural language interaction – Makes robots easier to use by enabling complex task assignments using natural language.

Task orchestration – Deconstructs natural language commands into subtasks and integrates with existing robot controllers and behaviors to complete long-horizon tasks.

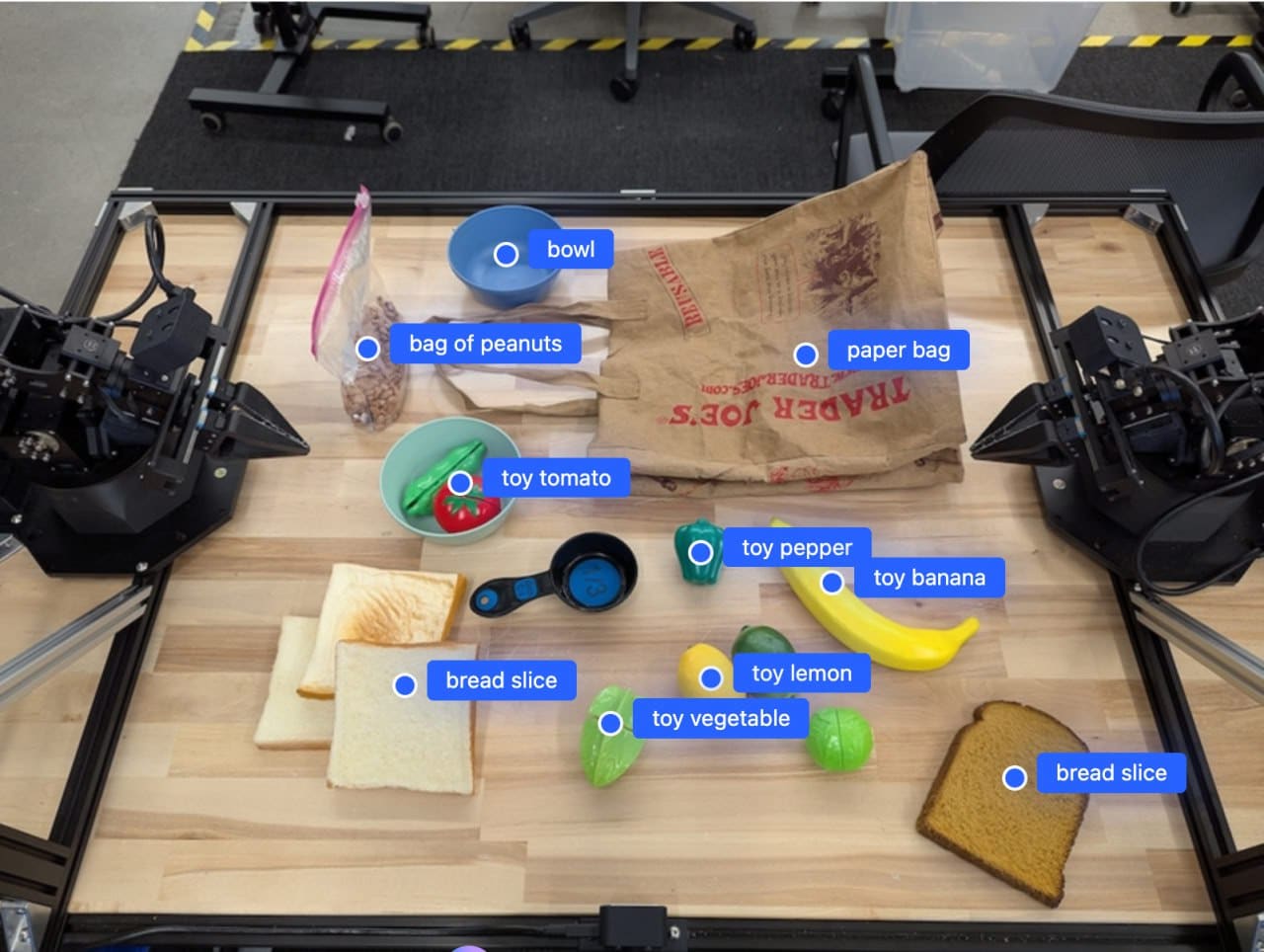

Versatile capabilities – Locates and identifies objects, understands object relationships, plans grasps and trajectories, and interprets dynamic scenes.